How to Build an Instant Messaging or Video Empire: Where Cloud Services Take Off

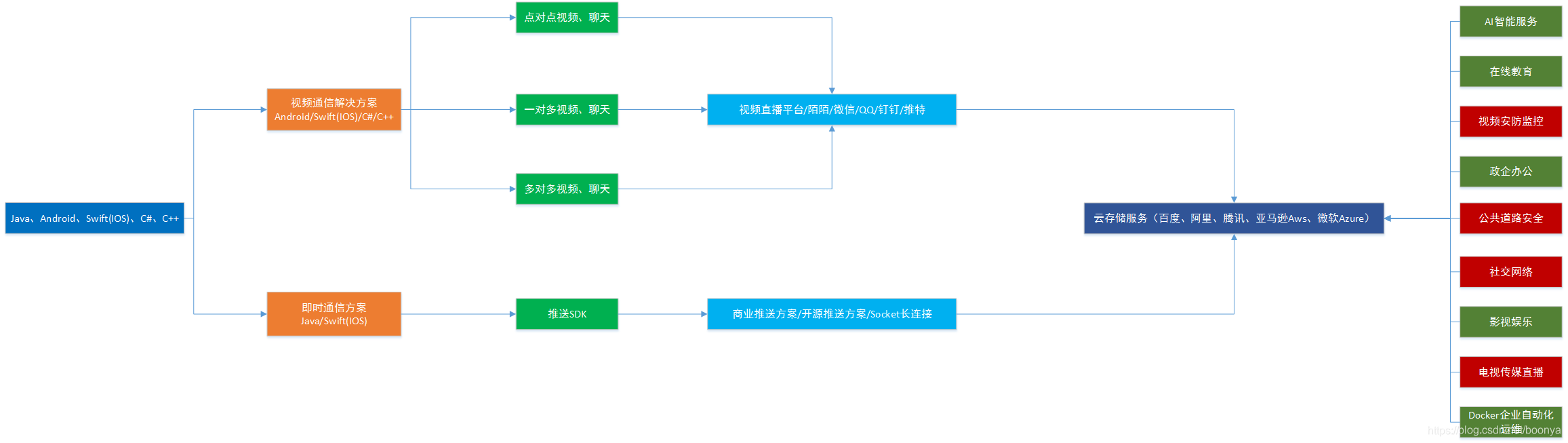

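After thinking it over for a while, I still feel that working in a traditional industry lacks momentum: immersed in a sea of business logic, one may well drown. Here I summarize the technology stack and the related knowledge, which also serves as a primer. Live video streaming was very popular two years ago, and over the past few years the market has gradually become more regulated. The spread of streamers broadcasting pornographic content in particular has cast a haze over the video industry, making it hard to see how it should develop. Beyond these problems, though, traditional industries still have strong demand for video, and that demand can be tapped if approached well. And once the "Internet Plus" concept for traditional industries is combined with the cloud, the cloud is almost everywhere.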

Instant messaging and voice: scenarios and domains

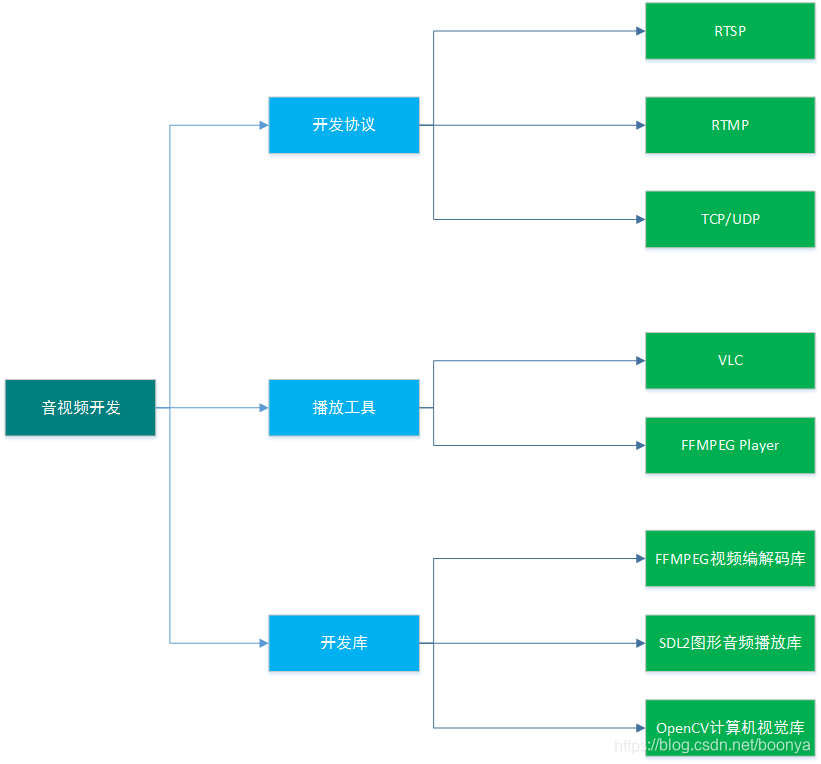

Audio and video development

Reposted from: http://qgxj.baihongyu.com/